The hidden world of data annotators: who’s doing the grunt work behind your AI’s “personality,” and at what cost

Inside the Profits, Pressures, and Paradox of Human AI Trainers

You use chatbots to ask questions. Tell them jokes. Maybe even spill out your hopes, your fears. What you don’t see: the humans behind all that. The people who train them. The people who correct them. The people who make them sound… more human.

This isn’t sci-fi. It’s now. The rise of AI, especially generative models, depends heavily on invisible labor. Workers distributed around the world—annotators, data labelers, moderators—are feeding AI its personality, moral compass, even manners. But the deeper you look, the messier things get. Stunning profits. Stark moral grey zones. Unpredictability. Disturbing content. And often, those doing the work are those with the least power to negotiate.

If you care about tech, justice, money, or human dignity—this matters.

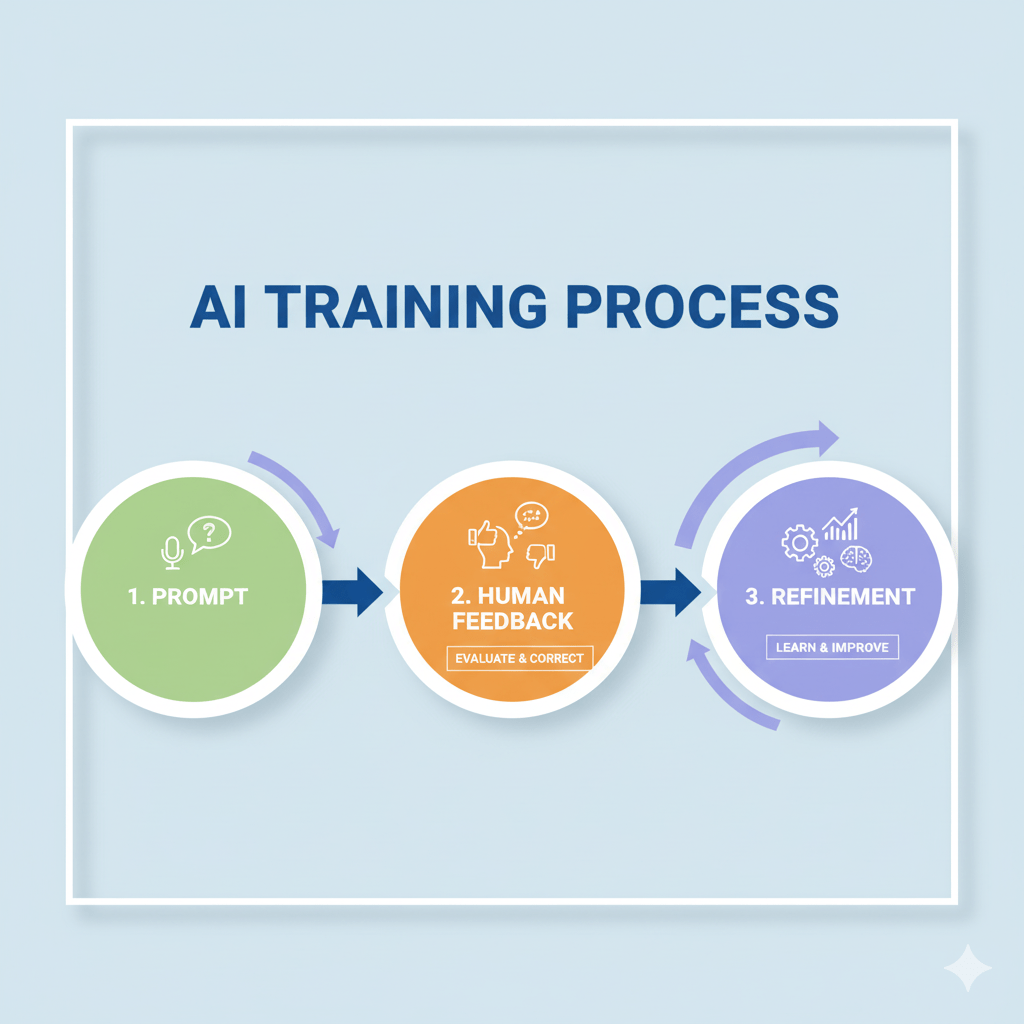

What’s Going On: What Human AI Trainers Actually Do

Drawing heavily on a recent Business Insider exposé, here’s what human AI trainers do, how they do it, and why the work is both lucrative and disturbing.

- Labeling and auditing: Reading chatbot responses to prompts (“If you were a pizza topping, what would you be?” or “Recall your earliest childhood memory”) and judging whether they’re helpful, accurate, concise, natural-sounding, or offensive, rambling, robotic.

- Red-teaming: Trying to make the model screw up. Pushing it with dangerous prompts (bomb manuals, how to avoid law enforcement, sexual abuse, etc.) to see how it responds. Often, the more it “breaks,” the higher the pay.

- Content moderation & safety: Identifying hate speech, slurs, racism, or other kinds of “unsafe” content in text, audio, and image datasets. Sometimes that includes video as well.

- Verbal / voice work: Recording voice clips or conversations in many languages for voice-data projects. Playing roles, responding to spoken prompts, sometimes in group calls.

Money, Flexibility—and the Flip Sides

Yes, there’s money. Yes, there’s flexibility. But no, it’s not all “work from home, sipping tea, making bank.” Let me break down both sides:

The Upsides

- Potential for good earnings: Some trainers are making thousands per month. One story: Isaiah Kwong-Murphy, a college student, made over $50,000 in six months working on projects with Outlier.

- Flexibility & remote work: Many of these gigs let people work from home or anywhere. For people in places with a lower cost of living, or with flexible lifestyles, this can be a real boost. There may be night shifts and odd hours, but also plenty of freedom: no commute, and sometimes no rigid schedule.

- Skill building: You get better at spotting bias, understanding language, and navigating complex moral and ethical prompts. If you’re into linguistics, philosophy, psychology, or even policy, this is a crash course. You learn what “safe vs. unsafe output” means. You see behind the curtain.

The Downsides

- Pay instability & sudden rate changes: What you think you’re earning can drop out from under you. One worker saw their rate go from $50/hour to $15/hour without much warning.

- Project droughts and irregular work: Sometimes there just aren’t assignments. Platforms shift clients, pause projects, or change what they need. Workers can go from busy to nothing.

- Disturbing content & moral burden: Reading extremely harmful or explicit content, or being asked to trigger it, is part of the job. It takes a toll. Some tasks seem almost exploitative, pushing workers to provoke chatbot misbehavior.

- Lack of transparency: Workers often don’t know the ultimate purpose of what they’re doing. Is this for a benevolent application? Surveillance? Something more sinister? Clients hide behind NDAs and corporate secrecy.

- Risk of displacement: As models improve, they need less “raw” human feedback and more specialized expertise. Fewer general tasks means many people in this line of work may find the work disappearing, or becoming more demanding just to stay in.

Who’s Doing the Work—and Who’s Profiting

The people who annotate are often spread globally. Some are in rich countries; many are in places where wages are lower. The platforms and companies they work through (Outlier, Scale AI, xAI, OpenAI, and others) capture the profits. The clients are big tech firms, research labs, governments, and startups.

A few highlights:

- Meta bought a 49% stake in Scale AI for $14.3 billion.

- Some annotators in places like Kenya are paid extremely low rates (e.g., a few dollars for tasks that take a substantial amount of time).

So there’s a power imbalance. The ones doing emotionally heavy labor—filtering out hate, processing traumatic prompts—get little visibility and often little protection.

The Ethical Quicksand

This work isn’t just about pay. It implicates every one of us in deeper ethical questions:

- Surveillance & privacy: Some tasks involve gathering vast amounts of images, metadata, voice data (accents, expressions, even selfies from multiple angles). Sometimes people don’t know where that data ends up.

- Bias & cultural framing: Who defines what’s “offensive”? Who’s deciding what’s “normal English,” or proper behavior, or moral boundaries? If people from narrow backgrounds are in charge, the AI can reflect narrow values.

- Emotional cost: Seeing or being asked to handle extremely troubling content can harm mental health. Workers sometimes have little support or aftercare.

- Obsolescence by AI: It’s ironic. The work of data annotators helps build systems that may reduce the need for them.

What It Means for Us—Especially Black Millennial Women

Because yes, I want you to see how this stuff connects to you (beyond “this is interesting tech news”).

- Opportunity, but tread carefully: These jobs can offer income streams, especially digital, remote, flexible ones. If you’re hustling with side gigs or drawn to tech-adjacent work, you might see this as a chance.

- But know the risks: inconsistent pay, moral weight, minimal protections.

- Negotiating power is weak: As with gig work in general, there’s often minimal recourse. If rates drop, projects vanish, or instructions change, workers usually just absorb the hit. This is worse for people without the resources to buffer such shocks.

- Voice & representation matters: If AI is going to be part of our everyday world (which it already is), then representation among those building and training it is essential. That is how we ensure cultural nuance, mental health sensitivity, and fair depiction of Blackness and Black women, varied body types, dialects, and identities. If we’re not present in the room (or on the annotation platform), the systems will misrepresent, erase, or misuse us.

- Self-care & boundaries: If you ever take on this kind of work, or see someone close to you doing it, you need coping strategies. The content is not always pretty. You deserve mental rest and pay that reflects the harm you absorb.

What Should Change — What We Should Demand

It’s not enough to just observe. If you care about justice, fairness, or simply human dignity, here are things that need to shift:

1. Better pay & stable rates

Transparent compensation. If a project demands more emotional labor, more difficult content, then pay should reflect that. No surprise rate cuts without justification.

2. Mental health support

Platforms should provide or fund resources: therapy, peer support, debriefing sessions. You can’t ask someone to wade through darkness without support.

3. Transparency & accountability

Workers should know: what they are building, how their data is used, who the ultimate end-users might be. NDAs are fine in some contexts—but not when they hide potential misuse.

4. Regulation & labor protections

Some national laws already protect gig workers, but many annotators are global and remote, and fall outside those protections. We need global standards, or at least stronger platform policies.

5. Diversifying the human loop

Ensure a variety of backgrounds, languages, lived experiences among annotators so that the resulting AI isn’t monocultural, biased, or tone-deaf.

The Moral Mirror: What We’re All Building

Ironic twist: the better AI becomes at being human, the more it reflects our worst and best. When an AI’s voice is kind, helpful, and inclusive, that’s because someone labored (often invisibly) to teach it those values. When it fails, it’s often because the values, context, or oversight failed.

If you talk to your AI: what are you hearing? What assumptions are built in? What voices are silent?

Knowing this world exists helps us demand better. Demand tech that respects people, not just profits. Because at the end of the day, “AI” is just many humans disguised in algorithmic clothes.

Your Takeaway

If you ever consider picking up data-annotation or AI-trainer work, I want you walking in with your eyes open:

- Ask upfront: what kind of content will you deal with? Will you need to trigger unsafe content? Will you get support?

- Research the platform: what’s the reputation? Stability? How often do rates change?

- Document your work: keep track of your hours, the content you handle, and how it’s making you feel, so you can protect yourself.

- Look for community: people already doing this work have insights, warnings, hacks.

Where Things Seem to Be Heading

- Big tech is slowly bringing more of this work in-house, relying less on faceless contractors for safety and alignment tasks.

- “Reasoning” models require more specialized, expensive labor—lawyers, doctors, domain experts. Generalist work may shrink.

- Workers will push for more rights: pay transparency, mental health resources, ethical guardrails. Platforms will have to respond, or risk backlash.

The world of humans training AI chatbots is part wonder, part warning. There’s opportunity there. There’s also risk, exploitation, emotional labor, and moral ambiguity. For Black millennial women, for writers, creators, thinkers—it’s another frontier. One we can inhabit, shape, and demand justice in.

Stay curious. Stay critical. Because the benefit of this tech should include you, not just the corporations calling the shots.

References / Links:

- “Inside the lucrative, surreal, and disturbing world of AI trainers,” Business Insider, Sept 7, 2025.

- Outlier / Scale AI / Meta investment news: Meta’s purchase of a 49% stake in Scale AI for $14.3 billion.

- Fairwork Cloudwork Ratings report on gig workers in data labeling.
